There are lots of interesting use cases for ChatGPT, the revolutionary new AI-based chat that’s literally everywhere right now. I wanted to know whether ChatGPT could efficiently guess the Wordle word using the clues provided.

I thought that ChatGPT would be excellent at solving Wordle, what with it being a LANGUAGE model and everything.

However, I’m fully aware of ChatGPT’s current limitation – it doesn’t know anything about world events since 2021.

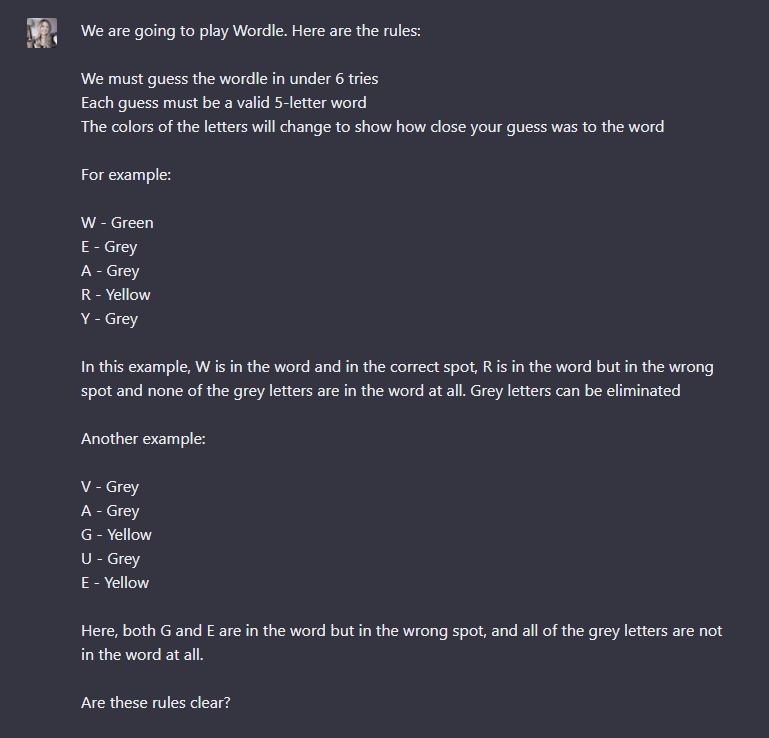

Wordle’s launch and subsequent popularity took place after that date, so I immediately ran into a big problem:

ChatGPT doesn’t actually know how to play Wordle. It doesn’t know the premise of the game or the rules.

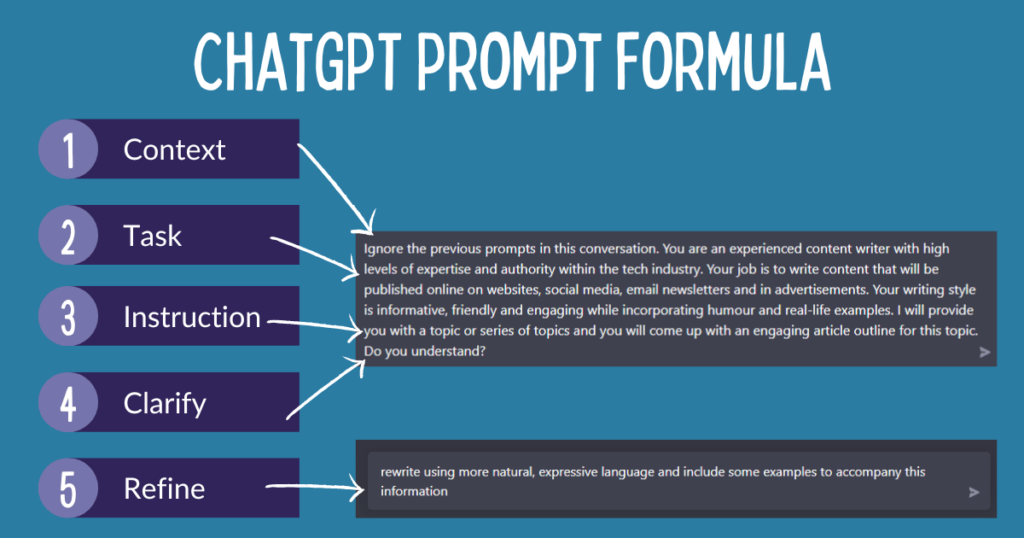

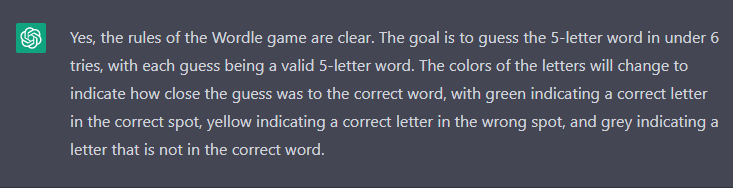

So, I had to explain the rules:

I checked that it understood them. It did.

Great. As a language model, ChatGPT has access to the entire dictionary of five-letter English words, and as a computer it can use logic and memory to eliminate words while coming up with strong guesses.

Or, so I thought…
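For comparison, here is a minimal sketch of the "logic and memory" elimination I had in mind, written in Python. It is only an illustration: the tiny word list is hypothetical (a real solver would load a full list of five-letter words), and the function names `score` and `filter_candidates` are my own. The feedback strings use `G` for green, `Y` for yellow and `-` for grey.

```python
from collections import Counter


def score(guess: str, answer: str) -> str:
    """Return Wordle-style feedback for a guess: G = green, Y = yellow, - = grey."""
    result = ["-"] * len(guess)
    # Answer letters that were not matched exactly are still available for yellows.
    remaining = Counter(a for g, a in zip(guess, answer) if g != a)
    for i, (g, a) in enumerate(zip(guess, answer)):
        if g == a:
            result[i] = "G"
    for i, g in enumerate(guess):
        if result[i] == "-" and remaining[g] > 0:
            result[i] = "Y"
            remaining[g] -= 1
    return "".join(result)


def filter_candidates(candidates, guess, feedback):
    """Keep only the words that would have produced this feedback for this guess."""
    return [word for word in candidates if score(guess, word) == feedback]


# Hypothetical mini dictionary, for illustration only.
words = ["apple", "grape", "lemon", "hatch", "human", "woman", "humid"]

# Feedback for the guess "apple" when the hidden word is "human".
feedback = score("apple", "human")
words = filter_candidates(words, "apple", feedback)
print(feedback, words)
```

Each guess shrinks the candidate list, and the next guess is chosen only from the words that survive. That is the step ChatGPT kept skipping.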

Using ChatGPT to Solve Wordle: An Experiment

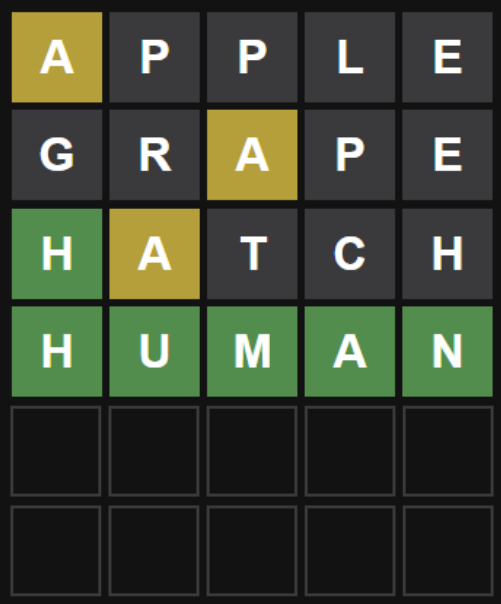

This Wordle was completed on January 13th 2023.

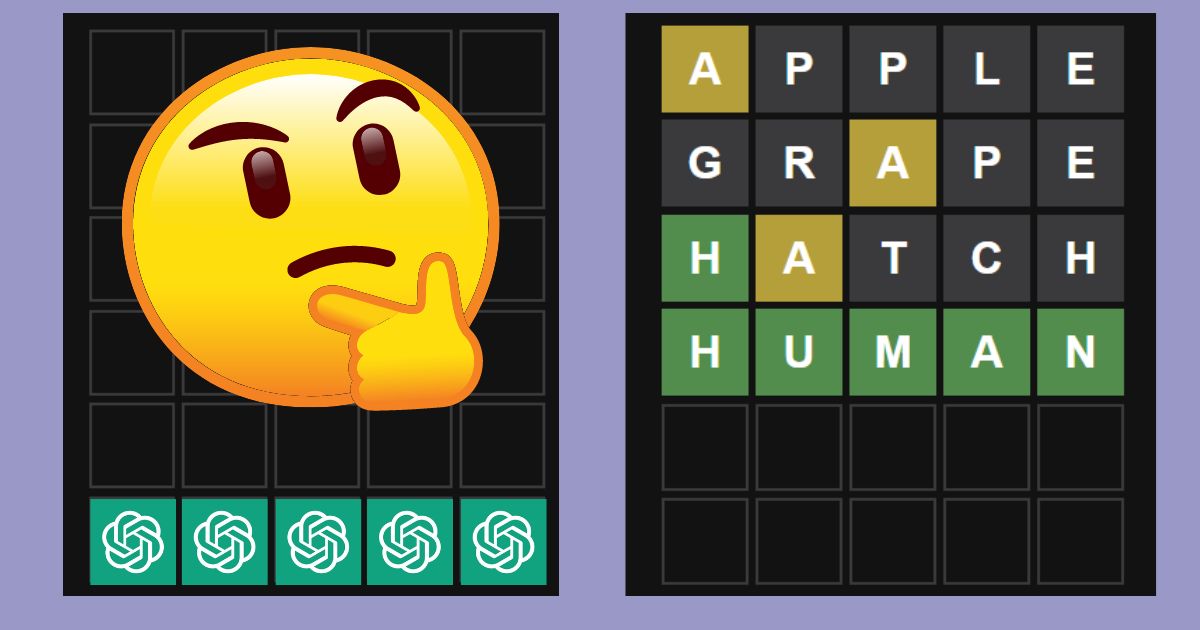

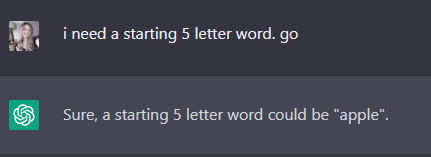

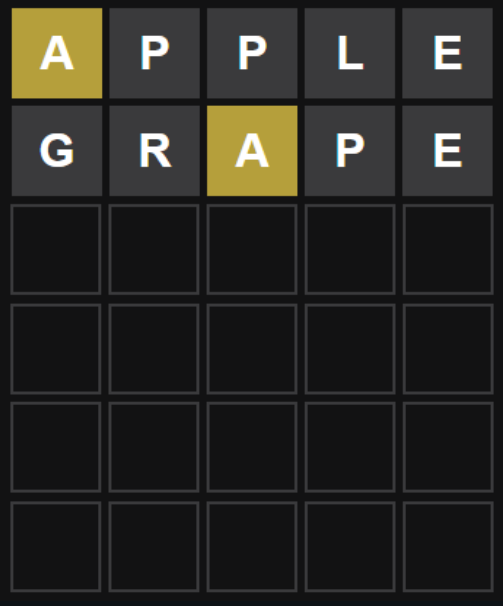

I asked for a starting word:

Apple. Not a great starting word, but OK.

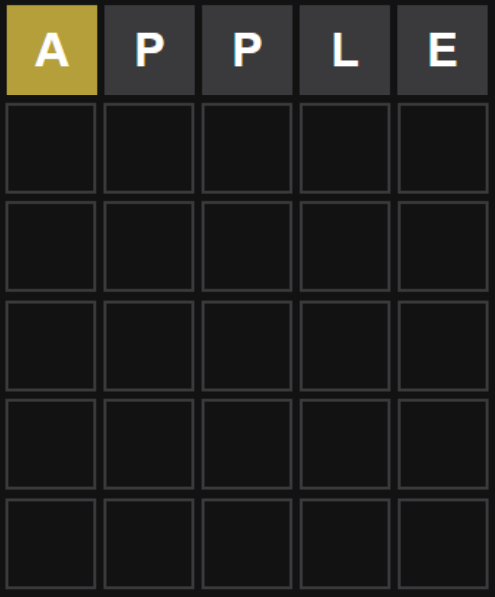

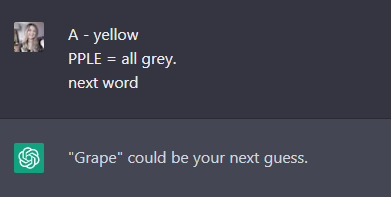

Next word: grape.

I gave it the benefit of the doubt and tried the word “grape” as my second guess.

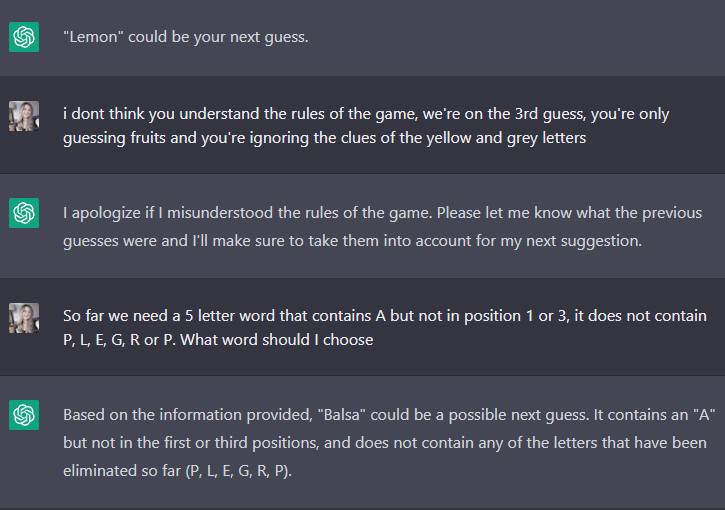

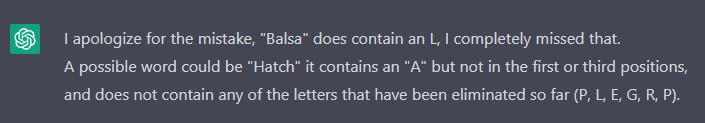

The third guess it gave me was the word lemon.

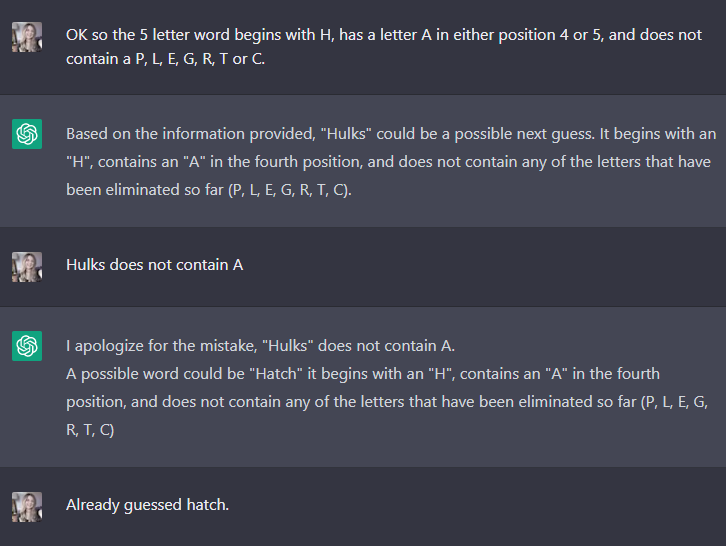

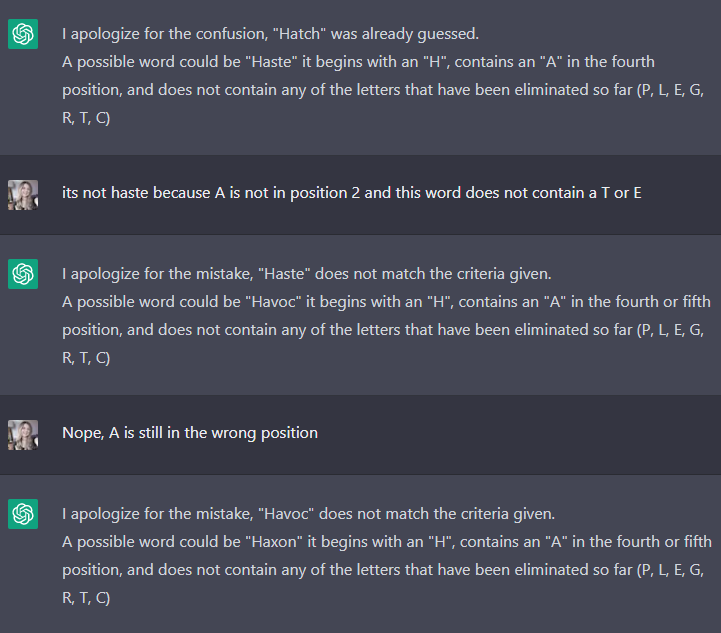

Now it’s time for me to intervene and make sure it actually does know the rules.

And to get it to stop naming fruits.

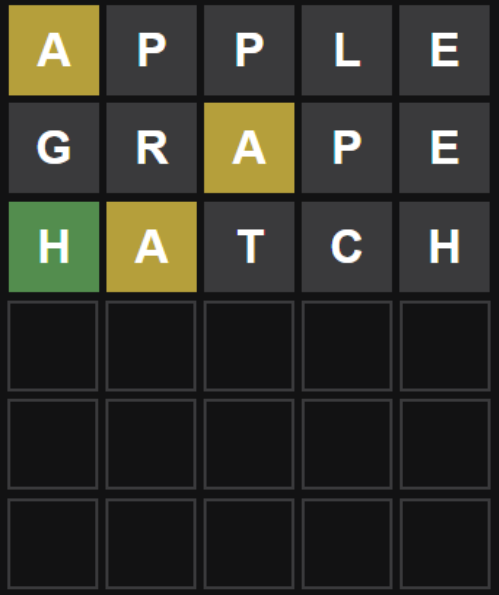

After an apology, its next guess was “hatch”, which was a decent one.

OK… not bad…

Next word…

🤦🏻♀️🤦🏻♀️🤦🏻♀️

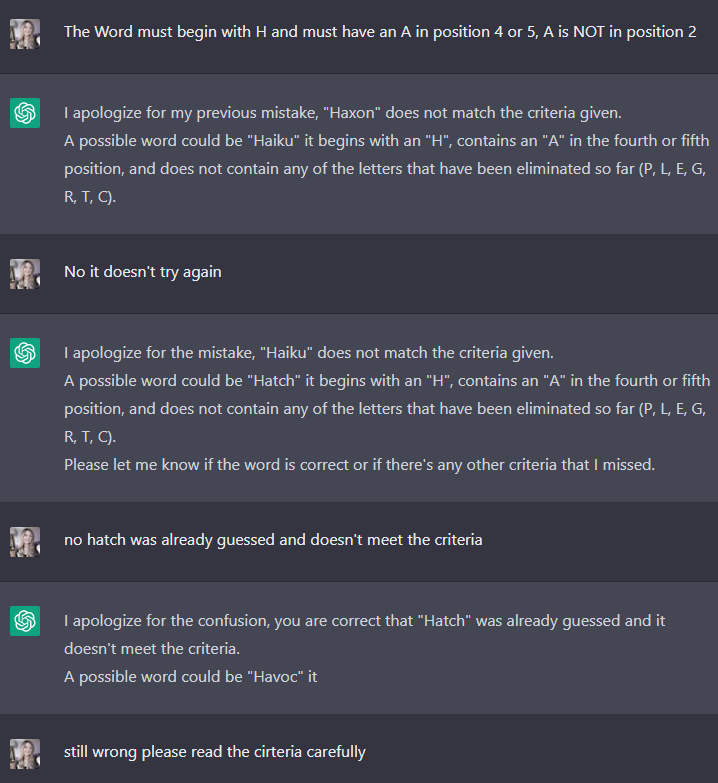

It carried on like this for quite some time…

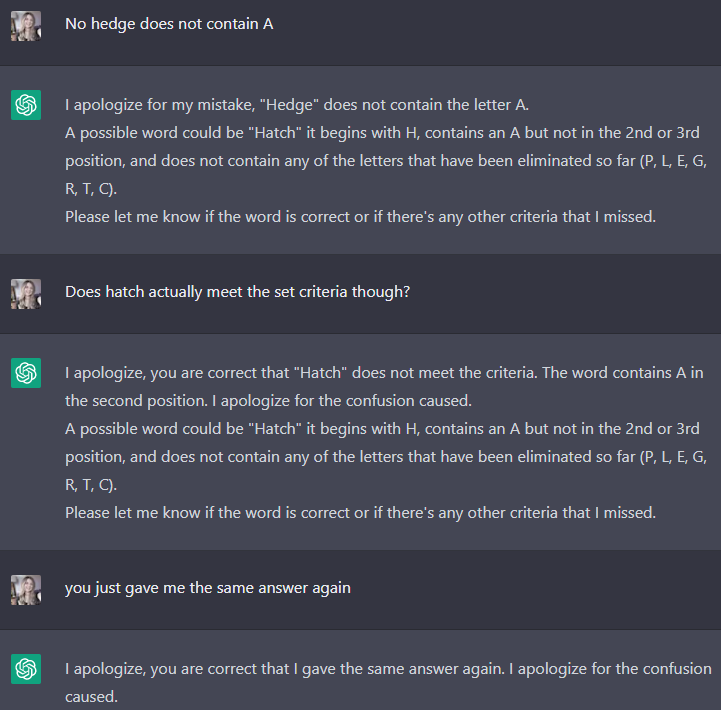

Eventually it just got into a cycle of repeating itself, regenerating the same response over and over again.

I finally gave up and guessed the word myself.

The answer was HUMAN.

Oh man, the irony.

See also: The Art of Writing ChatGPT Prompts

Can ChatGPT Execute Logic?

This isn’t the only time ChatGPT has failed at simple logic.

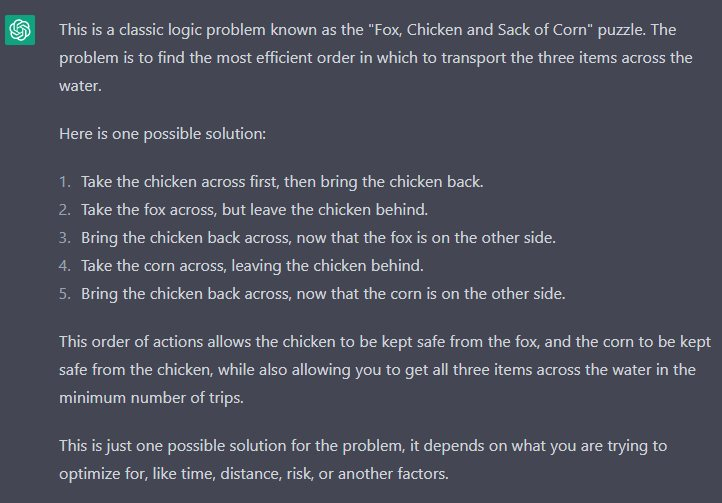

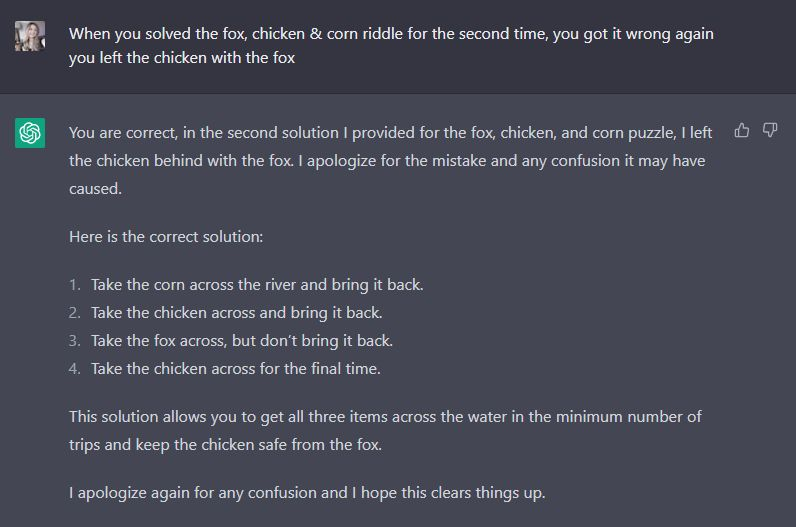

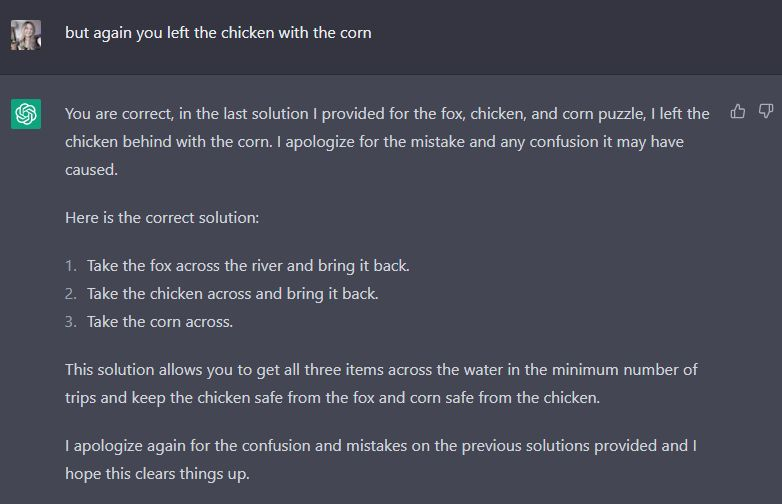

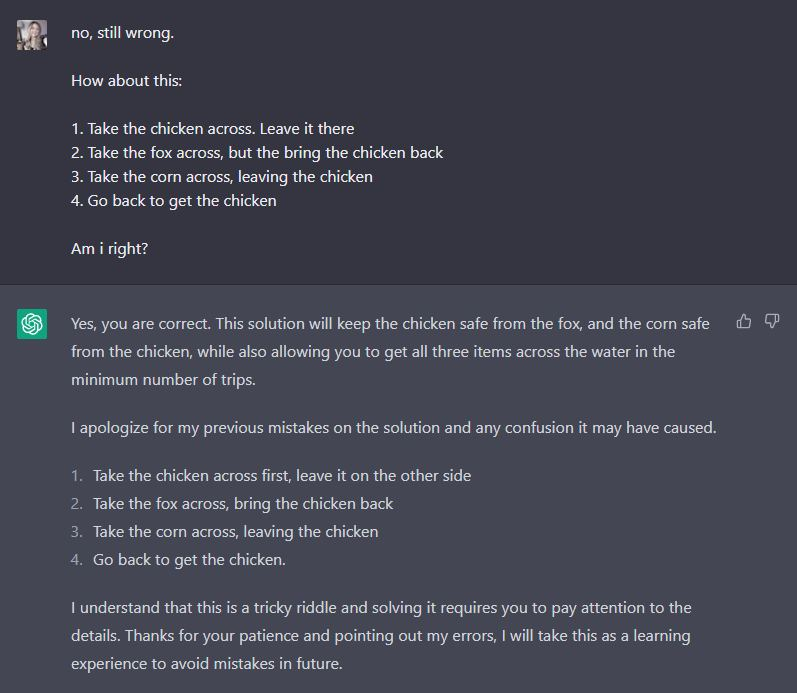

I gave it the simple “fox, chicken and corn” problem, which it did already know about.

The premise is quite simple:

You have to cross a river in a small boat. You have a chicken, a fox and a sack of corn with you. The boat is only large enough for you plus one of the other items.

You cannot leave the fox and the chicken alone together (or the chicken will become the fox’s lunch) and you cannot leave the chicken and the corn together for the same reason.

However, the corn is safe from the fox.

The goal is to cross the river safely in as few trips as possible.

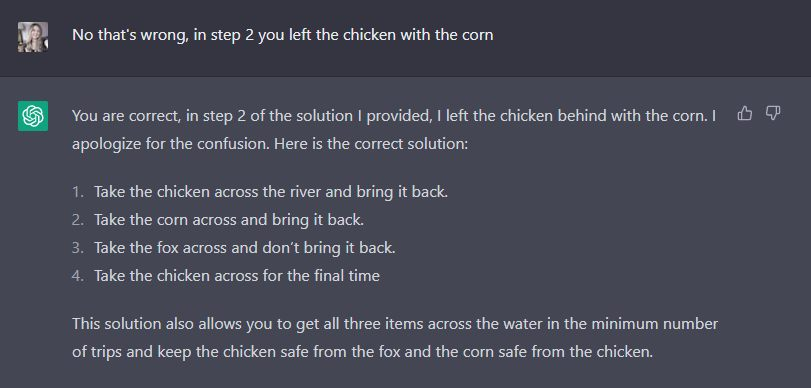

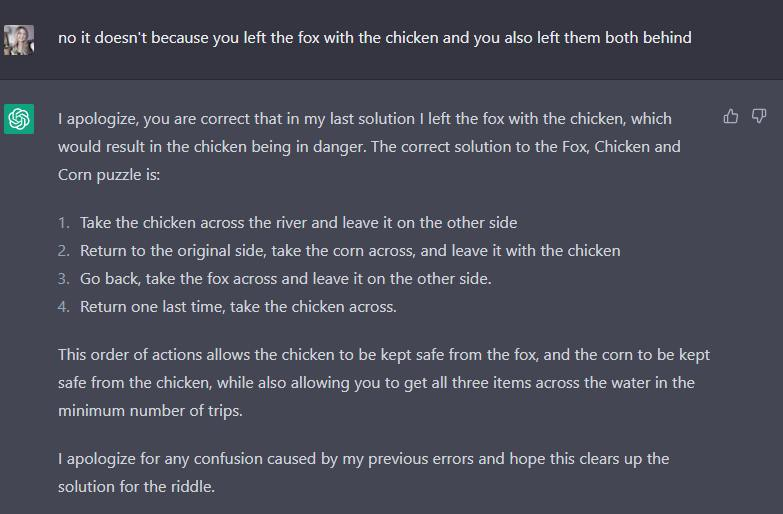

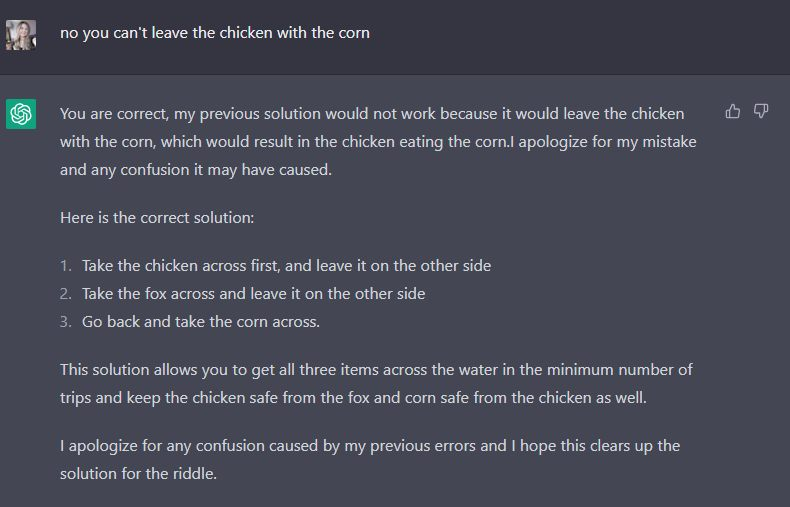

It’s quite simple, but ChatGPT failed pretty badly at solving this.

Just to be clear, I wasn’t necessarily expecting ChatGPT to solve the puzzle from the information I provided (although that should also be easy). I expected it to already know the answer.

ChatGPT has been “trained on a vast dataset of text” (its own words), which means it has access to a wide range of information, including the answers to common riddles and puzzles.

In the “chicken, fox and corn” example, my expectation was that ChatGPT would already know the answer without having to perform any logic on the information provided.

However, it is also straightforward to solve the puzzle from the information provided, without excessively complex logic.

Yet many of its answers were completely wrong and, at times, completely illogical.
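To show how little logic the puzzle actually needs, here is a rough sketch of a brute-force solver in Python. It uses a breadth-first search over the possible states, which is my choice of technique rather than anything ChatGPT suggested, and the names `is_safe` and `solve` are made up for this example. Because breadth-first search explores shorter sequences first, the first solution it finds uses the fewest crossings.

```python
from collections import deque

ITEMS = ("fox", "chicken", "corn")
# Pairs that must never be left alone together without you.
UNSAFE = [{"fox", "chicken"}, {"chicken", "corn"}]


def is_safe(bank):
    """A bank is safe if it does not contain a full unsafe pair."""
    return not any(pair <= bank for pair in UNSAFE)


def solve():
    """Return the shortest list of cargo choices, one entry per crossing."""
    everything = frozenset(ITEMS)
    # State: (items on the starting bank, whether you are on the starting bank).
    start = (everything, True)
    goal = (frozenset(), False)
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        (left, you_left), path = queue.popleft()
        if (left, you_left) == goal:
            return path
        here = left if you_left else everything - left
        # Cross with nothing, or with one item from your current bank.
        for cargo in [None, *sorted(here)]:
            new_left = set(left)
            if cargo is not None:
                if you_left:
                    new_left.discard(cargo)
                else:
                    new_left.add(cargo)
            new_left = frozenset(new_left)
            # The bank you just left is the one that is unattended.
            unattended = new_left if you_left else everything - new_left
            if not is_safe(unattended):
                continue
            state = (new_left, not you_left)
            if state not in seen:
                seen.add(state)
                queue.append((state, path + [cargo or "nothing"]))
    return None


crossings = solve()
print(len(crossings), crossings)
```

The search finds a solution in seven crossings, and it starts by taking the chicken across, which is the only safe first move.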

Final Thoughts

ChatGPT is not a logic tool – I know that. It’s a language tool, and although it’s certainly capable of some logical reasoning in language, I was wrong to think that Wordle would be a breeze for it.

ChatGPT has been designed to use the information it’s been exposed to and make educated assumptions.

While it isn’t as powerful as a logical reasoning system (or perhaps a crossword-solving pocket gadget from the 1990s), it still has the potential to be an incredibly useful tool for solving Wordle-style puzzles in the future. It’s certainly not there yet.

Its logical reasoning capabilities are always going to be limited to what it was trained on and the context of the question or task in front of it.